Build audio-only experiences with the Daily API

The Daily call object can be used to build voice-first and voice-only applications. This guide covers how to:

- Set up an audio-only app with Daily

- Get to know browser-imposed audio constraints

- Apply best practices to optimize sound quality

- Implement common audio-only app feature requests

- Analyze performance issues

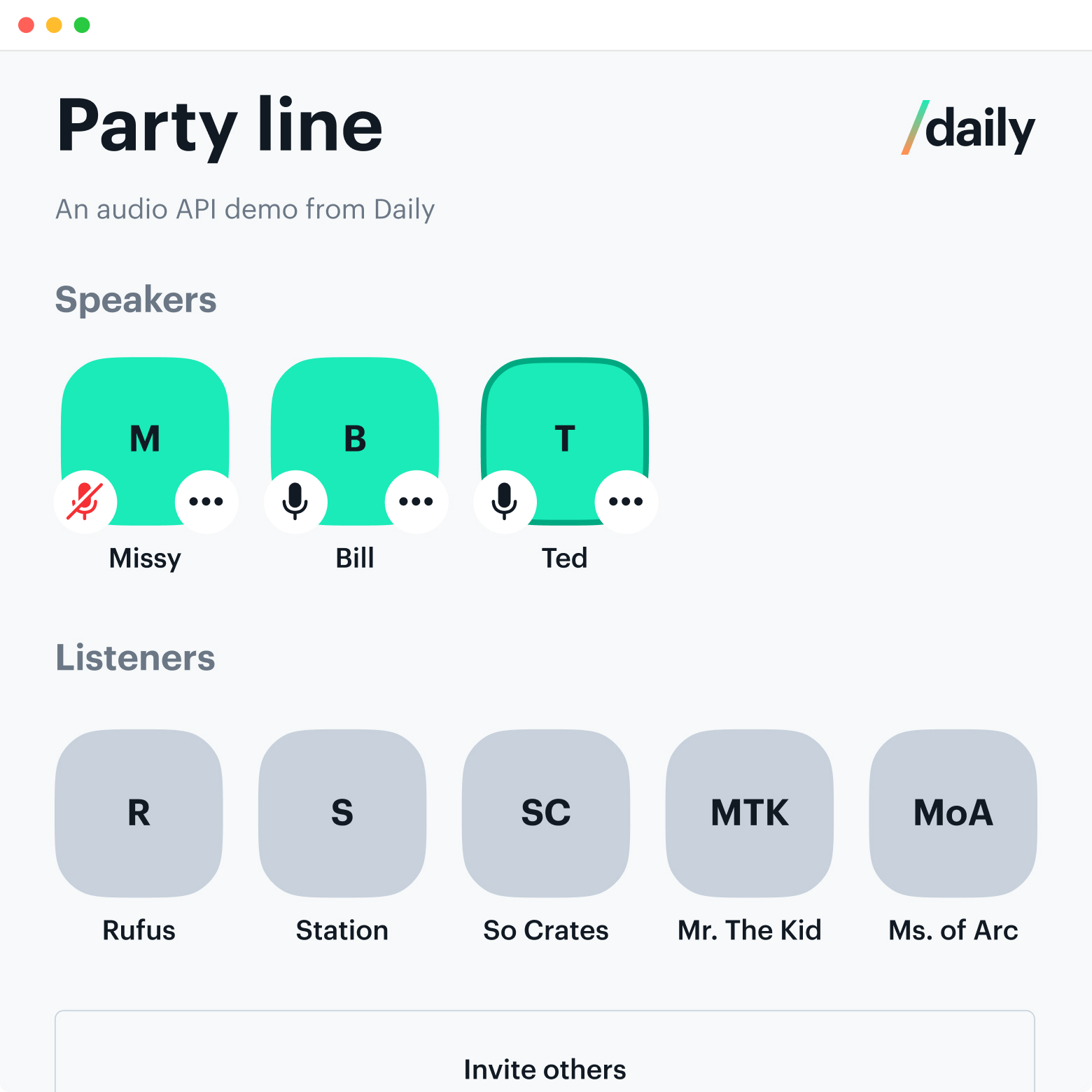

If you prefer to dive into a demo codebase, check out the monorepo for the Party Line app, with sample code for React, iOS, Android, and React Native.

Audio-only pricing

Accounts are automatically configured to be billed for audio-only calls when no video tracks are present in a call. Audio-only calls are billed at a lower rate than video calls. Please see our pricing page for the most up-to-date information.

For a call to be considered audio-only, it must contain no video tracks at any point. There are several ways to ensure this:

- Start with video disabled when creating the room

- Set videoSource: false when initializing the call object

- Restrict video via meeting token permissions (set send permissions to audio-only)

- Make sure users are not able to screen share, because screen share is considered a video track

Throughout the entire duration of the call, you must also ensure that no video tracks are added. If even one participant has an active video track, the call will be classified—and billed—as a video call.
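The options above can be combined when a room and its meeting tokens are created over the REST API. The sketch below only builds the `properties` payloads. The helper names are hypothetical, and the property names (`start_video_off`, `enable_screenshare`, `permissions.canSend`) should be checked against the current API reference before use.

```javascript
// Properties for a POST /rooms request: cameras start off and
// screen sharing is disabled for the whole room.
function audioOnlyRoomProperties() {
  return { start_video_off: true, enable_screenshare: false };
}

// Properties for a POST /meeting-tokens request: the token holder
// is only permitted to send audio.
function audioOnlyTokenProperties(roomName) {
  return {
    room_name: roomName,
    start_video_off: true,
    enable_screenshare: false,
    permissions: { canSend: ['audio'] },
  };
}
```

Restricting send permissions in the token is the stricter of the two, since starting with video off does not by itself stop a participant from turning a camera on later.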

Set up an audio-only app with Daily

All Daily audio-only experiences are built on two call object properties:

- videoSource: false turns off cameras.

- subscribeToTracksAutomatically: false turns on manual track subscriptions.

Turn off camera streams

The Daily call object provides low-level access to a participant's MediaStream tracks. When a Daily call object is created via createCallObject(), these streams can be manipulated.

For audio-only applications, the camera stream needs to be disabled. To do this, set the videoSource call object property to false when calling createCallObject().
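A minimal sketch of that setup follows. The helper name is hypothetical, and `Daily` (the default export of `@daily-co/daily-js`) is passed in as an argument only so the function can be exercised outside a browser.

```javascript
// `Daily` is the default export of @daily-co/daily-js.
function createAudioOnlyCallObject(Daily) {
  return Daily.createCallObject({
    videoSource: false, // never request or publish a camera track
  });
}

// Usage in an app (the room URL is a placeholder):
//   import Daily from '@daily-co/daily-js';
//   const callObject = createAudioOnlyCallObject(Daily);
//   await callObject.join({ url: 'https://your-domain.daily.co/your-room' });
```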

No screen sharing

Daily provides easy-to-use screen sharing functionality via the startScreenShare() method. However, a screen share is considered a video track, so we recommend not implementing screen sharing in audio-only applications.

Configure manual track subscriptions

If only two participants at a time will join an audio room, then manual track subscriptions won't meaningfully improve the user experience. Skip ahead to browser constraints.

Daily calls operate on a publish/subscribe model: participants publish their own media tracks, and subscribe to other participants’ tracks. By default, Daily abstracts away the complexity of track management, and subscribes every participant to every other participant's tracks.

For audio-only applications, however, we recommend turning off that default behavior. As more participants join, subscribing a participant to all audio tracks could overwhelm the server routing all the tracks (the Selective Forwarding Unit, or SFU). The risk of unwanted background noise also increases if every participant automatically subscribes to every other participant’s audio.

Manual track subscriptions make it possible to selectively subscribe, unsubscribe, and "stage" audio tracks.

To set them up (and turn off the Daily default behavior), set the subscribeToTracksAutomatically call object property to false via createCallObject(), join(), or the setSubscribeToTracksAutomatically() method. Passing the property to createCallObject() or join() starts the call with manual track subscriptions already enabled. setSubscribeToTracksAutomatically(), by contrast, can be called at any point during the call to update the setting dynamically. It is a good option if you want to use manual track subscriptions conditionally, such as waiting until a certain number of participants have joined.
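As one illustration of the conditional approach, the sketch below switches to manual subscriptions once the call reaches a given size. The threshold value and the helper name are assumptions made for this example, not Daily recommendations.

```javascript
// Hypothetical cutoff: below this many participants, keep Daily's default.
const MANUAL_SUBSCRIPTION_THRESHOLD = 10;

function updateSubscriptionMode(callObject, threshold = MANUAL_SUBSCRIPTION_THRESHOLD) {
  // participants() returns an object keyed by participant, including `local`.
  const count = Object.keys(callObject.participants()).length;
  const automatic = count < threshold;
  callObject.setSubscribeToTracksAutomatically(automatic);
  return automatic;
}

// Usage: re-evaluate whenever the roster changes.
//   callObject.on('participant-joined', () => updateSubscriptionMode(callObject));
//   callObject.on('participant-left', () => updateSubscriptionMode(callObject));
```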

Once manual track subscriptions are enabled, use the updateParticipant() or updateParticipants() method to change the subscribed value of a participant’s tracks property.
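A short sketch of both methods, assuming the update is expressed through the `setSubscribedTracks` key with `true`, `false`, or `'staged'` for the audio track. The helper names are hypothetical.

```javascript
// mode: true (subscribe), false (unsubscribe), or 'staged'.
function setAudioSubscription(callObject, sessionId, mode) {
  callObject.updateParticipant(sessionId, {
    setSubscribedTracks: { audio: mode },
  });
}

// Subscribe to several participants' audio in a single call.
function subscribeToAudioOf(callObject, sessionIds) {
  const updates = {};
  for (const id of sessionIds) {
    updates[id] = { setSubscribedTracks: { audio: true } };
  }
  callObject.updateParticipants(updates);
  return updates;
}
```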

For more details on manual track subscriptions, check out our guide to scaling large calls with Daily.

There are many ways to manage track subscriptions directly. For example, Daily Prebuilt scales to a higher total number of participants by subscribing each participant to at most eight other participants' tracks. To do this, we keep a queue of the most recent speakers that updates whenever the active-speaker-change event fires. After each active-speaker-change event, the participant ID (peerId) of the new active speaker is pushed to the top of the queue.
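The recent-speaker queue described above can be sketched as a pair of pure functions. This is an illustration of the idea, not Daily Prebuilt's actual code, and the function names are hypothetical.

```javascript
const MAX_SUBSCRIPTIONS = 8;

// Move (or add) the new speaker to the front and trim the queue.
function pushRecentSpeaker(queue, peerId, max = MAX_SUBSCRIPTIONS) {
  const withoutSpeaker = queue.filter((id) => id !== peerId);
  return [peerId, ...withoutSpeaker].slice(0, max);
}

// Build an updateParticipants() payload: subscribe to queued speakers only.
function subscriptionUpdates(remoteIds, recentSpeakers) {
  const keep = new Set(recentSpeakers);
  const updates = {};
  for (const id of remoteIds) {
    updates[id] = { setSubscribedTracks: { audio: keep.has(id) } };
  }
  return updates;
}

// Usage:
//   let recent = [];
//   callObject.on('active-speaker-change', ({ activeSpeaker }) => {
//     recent = pushRecentSpeaker(recent, activeSpeaker.peerId);
//     const remoteIds = Object.keys(callObject.participants())
//       .filter((id) => id !== 'local');
//     callObject.updateParticipants(subscriptionUpdates(remoteIds, recent));
//   });
```

The sketch does not treat the local participant specially, so a real app may want to keep its own ID out of the queue.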

If you’re building an application for up to 100,000 participants, there are additional moving parts to consider. Please contact us so that we can assist you with your use case.

Get to know browser-imposed audio constraints

Up to 100,000 participants can join a Daily audio-only room, with up to 25 microphones on at a time.

Daily is built on top of the WebRTC protocol that enables real-time communication between browsers. Before two clients can exchange data like audio tracks, they need to agree on a codec to compress and then decompress the media.

Daily calls use the Opus codec, which is the standard audio codec for WebRTC calls and is supported by all modern browsers. We use the recommended settings for 'Full-band Speech', so one audio stream typically consumes around 40 kbps.
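For rough capacity planning, the per-stream figure can be multiplied by the number of audio tracks a participant is subscribed to. The helper below is a hypothetical back-of-the-envelope estimate that ignores transport overhead, so actual usage will vary.

```javascript
// Approximate bitrate of one Opus stream, taken from the figure above.
const OPUS_STREAM_KBPS = 40;

function estimateAudioDownlinkKbps(subscribedStreams, perStreamKbps = OPUS_STREAM_KBPS) {
  return subscribedStreams * perStreamKbps;
}

// estimateAudioDownlinkKbps(8)  -> 320 (kbps)
// estimateAudioDownlinkKbps(25) -> 1000 (kbps)
```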

Browsers apply their own echo cancellation, noise reduction and automatic gain control, but it doesn’t take many unmuted mics for background noise to start adding up. For the best participant experience, stick to no more than ten active mics at a time.

Apply best practices to optimize sound quality

While these recommendations are in an "audio-only" guide, they apply to working with audio in general, no matter the kind of application you're building.

Decouple <audio> elements from visual components

When <audio> tags are tied to visual elements, like an avatar that represents the participant, the audio stream will only be playable when the visual element is on the screen. This can lead to unintended user experiences. For example, scrolling out of view of the current speaker to see who else is listed on the call could stop audio entirely.

Render <audio> elements separately from visual elements to maintain consistent access to audio no matter what is currently displayed on a screen.
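One way to do this in plain JavaScript is to keep a registry of `<audio>` elements that lives outside any avatar or list component. The sketch below is an assumption about how an app might structure this. The function names are hypothetical, and the `env` parameter exists only so the code can be exercised without a browser.

```javascript
// sessionId -> <audio> element, independent of any visual component.
const audioElements = new Map();

function playAudioTrack(sessionId, track, env = globalThis) {
  let el = audioElements.get(sessionId);
  if (!el) {
    el = env.document.createElement('audio');
    el.autoplay = true;
    env.document.body.appendChild(el);
    audioElements.set(sessionId, el);
  }
  // Wrap the MediaStreamTrack so the element can play it.
  el.srcObject = new env.MediaStream([track]);
  return el;
}

function stopAudioTrack(sessionId) {
  const el = audioElements.get(sessionId);
  if (!el) return false;
  el.srcObject = null;
  el.remove();
  return audioElements.delete(sessionId);
}

// Usage with Daily track events:
//   callObject.on('track-started', ({ participant, track }) => {
//     if (track.kind === 'audio' && !participant.local) {
//       playAudioTrack(participant.session_id, track);
//     }
//   });
//   callObject.on('participant-left', ({ participant }) =>
//     stopAudioTrack(participant.session_id));
```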

Offload expensive processing tasks to an AudioWorklet

An AudioWorklet runs custom audio processing scripts on a separate thread for low-latency audio processing. This is useful for offloading expensive, real-time audio-related tasks. For example, Daily Prebuilt uses an AudioWorklet to detect microphone audio.
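To give a sense of what a worklet looks like, here is a small processor that reports the microphone level as a root-mean-square value. It is a generic Web Audio sketch rather than Daily Prebuilt's implementation, and the file name and processor name are hypothetical.

```javascript
// mic-level-processor.js (hypothetical file name)

// Root mean square of one block of samples: 0 for silence, up to 1 for full scale.
function rootMeanSquare(samples) {
  let sum = 0;
  for (let i = 0; i < samples.length; i++) sum += samples[i] * samples[i];
  return samples.length ? Math.sqrt(sum / samples.length) : 0;
}

// AudioWorkletProcessor and registerProcessor only exist inside a worklet scope.
if (typeof AudioWorkletProcessor !== 'undefined') {
  class MicLevelProcessor extends AudioWorkletProcessor {
    process(inputs) {
      const channel = inputs[0][0]; // first channel of the first input
      if (channel) this.port.postMessage({ level: rootMeanSquare(channel) });
      return true; // keep the processor alive
    }
  }
  registerProcessor('mic-level', MicLevelProcessor);
}

// Main-thread usage:
//   await audioContext.audioWorklet.addModule('mic-level-processor.js');
//   const node = new AudioWorkletNode(audioContext, 'mic-level');
//   audioContext.createMediaStreamSource(micStream).connect(node);
//   node.port.onmessage = ({ data }) => console.log(data.level);
```

This version posts a message for every processed block. A production app would likely throttle or smooth those updates before touching the UI.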

Implement common audio-only app feature requests

While none of the following features are required to build a successful audio-only application, they come up frequently enough in conversation that we wanted to share pseudocode.

Identify the current speaker

Adding a visual indicator when a participant starts talking can help sighted attendees follow a conversation.

Listening for the Daily active-speaker-change event is the best way to update an app’s UI to reflect when the current speaker has changed. Under the hood, the Daily API detects whose audio input is currently the loudest to identify the active participant, and emits active-speaker-change when that value changes.

The React Party Line source code listens for active-speaker-change to update the activeSpeakerId stored in local state. An isActive boolean on the participant’s <Avatar /> component is set to true when their ID matches the activeSpeakerId.
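Outside of React, the same pattern can be sketched with a small listener helper. The names below are hypothetical, and the code assumes the event payload exposes the speaker's ID as `activeSpeaker.peerId`.

```javascript
// Calls onChange with the new speaker's ID; returns a cleanup function.
function trackActiveSpeaker(callObject, onChange) {
  const handler = (event) => onChange(event.activeSpeaker.peerId);
  callObject.on('active-speaker-change', handler);
  return () => callObject.off('active-speaker-change', handler);
}

// Equivalent of the isActive flag on an avatar.
function isActiveSpeaker(participantId, activeSpeakerId) {
  return activeSpeakerId !== null && participantId === activeSpeakerId;
}

// Usage:
//   let activeSpeakerId = null;
//   const stop = trackActiveSpeaker(callObject, (id) => {
//     activeSpeakerId = id;
//     // re-render avatars here
//   });
```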

There are many other ways to keep track of and visualize speaker history. If you have ideas or need help, please reach out.

Create different participant roles

For situations with a few keynote speakers, moderated community forums, and other use cases, audio-only applications often need to give different participants different permissions.

In the Party Line demo app, for example, there are moderators, speakers, and listeners. Only moderators and speakers can unmute. Moderators have the ability to promote listeners to speakers (or moderators), mute speakers, and demote speakers to listeners.

Moderators, the participants who have access to all room privileges, are identified with meeting tokens that have the is_owner property set to true. A POST request to the Daily /meeting-tokens endpoint generates a meeting token.
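A hedged sketch of that request is shown below. It should run server-side so the API key is never exposed to clients. The helper names are hypothetical, and the code assumes the response body contains a `token` field.

```javascript
// Build the request for an owner (moderator) token.
function buildOwnerTokenRequest(apiKey, roomName) {
  return {
    url: 'https://api.daily.co/v1/meeting-tokens',
    options: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        properties: { room_name: roomName, is_owner: true },
      }),
    },
  };
}

// Send the request and return the token string.
async function createOwnerToken(apiKey, roomName, fetchImpl = fetch) {
  const { url, options } = buildOwnerTokenRequest(apiKey, roomName);
  const res = await fetchImpl(url, options);
  if (!res.ok) throw new Error(`Token request failed: ${res.status}`);
  const { token } = await res.json();
  return token;
}
```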

There are many ways to implement meeting token generation. Party Line, for example, uses a serverless function to generate a token for the participant who creates a room.

In a production environment, it is recommended to set up custom endpoints that manage participant roles in a database. Then, the Daily sendAppMessage() method can be used to notify all participants when a change has been made and a new participant roster should be pulled.

To keep code client-side for demo purposes, Party Line takes a different approach. It uses sendAppMessage() to send state updates to participants, and then listens for the app-message event and handles the change client-side. We recommend handling these changes server-side for production-level apps, however.
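The client-side pattern can be sketched as a broadcast plus a small reducer. The message shape and names below are made up for illustration and are not Party Line's actual protocol.

```javascript
const ROLE_CHANGE = 'role-change'; // hypothetical message type

// Broadcast a role change to every other participant ('*').
function announceRoleChange(callObject, sessionId, role) {
  const message = { type: ROLE_CHANGE, sessionId, role };
  callObject.sendAppMessage(message, '*');
  return message; // returned so the sender can apply it locally too
}

// Pure reducer: returns a new roles map, ignoring unrelated messages.
function applyRoleChange(roles, message) {
  if (!message || message.type !== ROLE_CHANGE) return roles;
  return { ...roles, [message.sessionId]: message.role };
}

// Usage:
//   let roles = {};
//   callObject.on('app-message', (event) => {
//     roles = applyRoleChange(roles, event.data);
//   });
//   roles = applyRoleChange(roles, announceRoleChange(callObject, id, 'speaker'));
```

Because any client can send an app message, this approach trusts participants to behave, which is one reason server-side role management is the safer choice in production.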

When speakers are promoted to moderators in Party Line, they are temporarily ejected from the call and forced to rejoin with a meeting token. This can cause a delay of a few seconds. A smoother transition (via a different moderator authorization pattern) may be preferable in production apps.

Allow participants to indicate when they want to speak

In audio-only calls, participants often speak over each other by accident or need a way to request a turn. A "raise your hand" feature helps here: it lets a participant indicate they have something to say without disrupting the conversation.

There are many ways to build this. You could use the app-message event to let one or more other participants know you'd like to speak. Party Line instead calls setUserName() to update a participant's username when they want to speak, because the UI shows the hand emoji as part of that username.
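A sketch of the username approach, assuming the hand is stored as a suffix on the name. The emoji choice, the suffix format, and the function names are all assumptions, not Party Line's exact code.

```javascript
const HAND = '✋';

// Add or remove the hand suffix without ever duplicating it.
function withHandRaised(name, raised) {
  const suffix = ` ${HAND}`;
  const base = name.endsWith(suffix) ? name.slice(0, -suffix.length) : name;
  return raised ? `${base}${suffix}` : base;
}

// Update the local participant's username on the call.
function setHandRaised(callObject, raised) {
  const current = callObject.participants().local.user_name;
  return callObject.setUserName(withHandRaised(current, raised));
}

// Other clients can check the flag when rendering a participant:
//   const handIsUp = participant.user_name.endsWith(` ${HAND}`);
```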

Analyze performance issues

Audio-only calls require relatively little bandwidth and CPU power compared to video calls. If testing reveals high CPU usage and stale WebSocket connections, check whether something else is causing the strain. For example, we've found that complex CSS animations can have a noticeable impact.

To look at Daily data, use the /logs endpoint after a session ends. See full details in our logs and metrics guide.

To get a live pulse on audio bitrates and packet loss while in the call, use the getNetworkStats() method.

Audio stats are not available in getNetworkStats() for daily-js versions before 0.49.0.
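The sketch below polls getNetworkStats() and pulls out audio figures. The field names on `stats.latest` (`audioRecvBitsPerSecond`, `audioSendBitsPerSecond`, `audioRecvPacketLoss`, `audioSendPacketLoss`) are assumptions to verify against the reference for your daily-js version, and the helper names are hypothetical.

```javascript
// Reduce a `stats.latest` object to the audio numbers we care about.
// Missing fields become null instead of throwing.
function summarizeAudioStats(latest = {}) {
  const toKbps = (bps) => (typeof bps === 'number' ? Math.round(bps / 1000) : null);
  return {
    recvKbps: toKbps(latest.audioRecvBitsPerSecond),
    sendKbps: toKbps(latest.audioSendBitsPerSecond),
    recvPacketLoss: latest.audioRecvPacketLoss ?? null,
    sendPacketLoss: latest.audioSendPacketLoss ?? null,
  };
}

async function pollAudioStats(callObject) {
  const { stats } = await callObject.getNetworkStats();
  return summarizeAudioStats(stats.latest);
}

// Usage: sample every few seconds while in the call.
//   setInterval(async () => console.log(await pollAudioStats(callObject)), 5000);
```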